Code

import graphviz

def gv(s):

return graphviz.Source('digraph G{ rankdir="LR"' + s + '; }')

gv('''model[shape=box3d width=1 height=0.7]

inputs->model->results; weights->model''')January 20, 2021

I’m taking the FastAI course again, as it’s good to keep up with the framework. It’s a neat framework that can produce good results if you can work within it.

Today I’ll be going over the first lesson of the 2020 course. This has some associated notebooks which I think are here.

The first thing to do is to check out the questionnaire. Since I’ve seen this lesson before I can have a go at the questions now and then circle back to them after watching the lesson.

This is the feel good question where all of the answers are “False”. It’s meant to show that deep learning is accessible to anyone.

The Mark I Perceptron.

Processing units, which have inputs, outputs and an activation state. The processing unit takes the inputs to determine the output.

There are connections between the processing units. The activations propagate from the outputs to the inputs through these connections.

There is a learning rule that can alter the pattern of connections between the units.

Finally there is an environment for all of this to exist within.

The fact that a single layer network could not learn XOR lead to the first AI winter. Adding a single additional layer means that a network can learn any function to an arbitrary precision.

The fact that a two layer network is theoretically able to learn anything means it is the best architecture. Deeper networks can learn faster and be cheaper to run.

A GPU is a graphics processing unit. It was originally designed to perform graphical operations for a computer. These are extremely simple operations that can be performed in parallel. So a GPU is optimized for performing many many simple separate operations at once.

The answer, 2, is printed below the cell.

The stripped notebook is available in the fastai/fastbook/clean folder. I’ve run all the cells now.

I’ve filled out more questions in this section from that appendix. There are also two open ended questions.

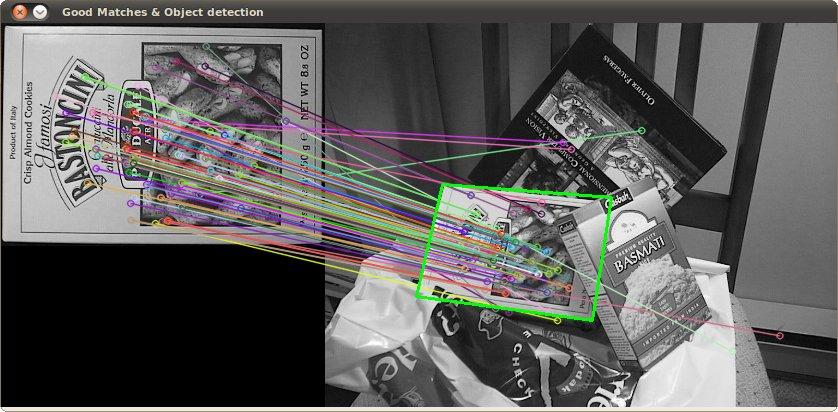

The process used by libraries such as OpenCV is to take lots of photos of something, find features like the corners (join points of lines) and then try to project these to a 3d model. Then you take a photo and find all the corners again, and see if a projection of the 3d model can be mapped to this.

This can be shown with the following image:

There are many problems with this - partial occlusion of the object, a failure to spot some of the features, cooincidental alignment with a different object etc etc. It’s also extremely time consuming to do.

So it takes a long time and has poor performance.

In the lesson Jeremy just says that it is very hard to work out what to do to recognize a cat, and that before deep learning it was impossible. I think a better quote is given from Arthur Samuel by Jeremy that spells it out:

Programming a computer for such computations is, at best, a difficult task, not primarily because of any inherent complexity in the computer itself but, rather, because of the need to spell out every minute step of the process in the most exasperating detail. Computers, as any programmer will tell you, are giant morons, not giant brains.

Arthur Samuel, Artificial Intelligence: A Frontier of Automation (1962)

I think Samuel here is Arther Samuel, who is mentioned at about 49 minutes into the lesson. Apparently he theorized about deep learning in 1962.

The “weight assignment” is referenced in the following passage:

Suppose we arrange for some automatic means of testing the effectiveness of any current weight assignment in terms of actual performance and provide a mechanism for altering the weight assignment so as to maximize the performance. We need not go into the details of such a procedure to see that it could be made entirely automatic and to see that a machine so programmed would “learn” from its experience.

This passage elides over the detail of the weight assignment as it is apparently testable at a task, so the weight assignment in some way contains some essential detail of the program to be performed. Jeremy has made this nice picture to show this. It looks just like a deep learning model:

We call them parameters for a deep learning model.

That’s the one that I just showed above.

A deep learning model is a mathematical operation that can involve millions or billions of different variables. Understanding the ways that these variables interact with each other to produce the answer is very hard. It’s frequently hard enough to understand what well written code with a low number of variables is doing.

The Universal Approximation Theoem.

Data and time.

The predictive policing model can alter the distribution of police in the city. The criminals that commit crimes can be caught by police that are present. If the criminals are evenly distributed throughout the city then areas that have a low number of police will have a lower number of criminals caught compared to an area with a high number of police. If the model only takes the absolute numbers per area then it would assign more police to the areas that already had a high number of police (and vice versa). This would eventually lead to all police being assigned to a limited number of areas and the model would think that all crime was occurring in those areas because they are the only place that criminals are apprehended.

No but the pre trained model is trained on images that are that size. The model has internalized structures at a given scale that usually appear in images of that size. If a much larger or much smaller image is used then the structure detecting parts of the model may be unable to cope with the change in scale.

When training a model on a training set, the model could just learn the correlation between the items in the training set and the correct answers. This is called over fitting, as the model has “fit” itself to the training set but generalizes poorly. The validation set is not used to train the model. Instead it is used to check that the model has not overfit, and when the validation accuracy starts to go down training should stop. However the validation set is now, indirectly, used to train the model - it represents the stopping condition. The test set is only used on the final model produced after all training has concluded. The model is then checked against the test set and the reported accuracy is the best guess at the accuracy of the model in production. It is very important that the test set is not used to make decisions that can feed back into the training loop.

It will automatically select a set of values from the training set. Usually 20% of the training set is held out for validation.

No. If you have time series data and randomly select points then you can train with data that includes or correlates with the held out data, making it much easier to solve the validation data. In this example splitting the dataset so that the “future” is the validation set would be a more representative test.

Overfitting is when the model has learned the mapping between each entry in the training data and the correct answer for that entry. The problem with this is that when presented with an unseen entry, like from the validation data set, the performance of the model is poor. It generalizes badly.

There have been cases where teams of white men have trained models which are very good at working for white men. When women of colour use these same systems they perform worse. In a way that is over fitting to the characteristics of white men - or it is a case that the training set was not diverse enough.

A metric is a measurement that describes the quality of the model. It is meant to be more interpretable by humans than the loss value.

The loss is intended to guide the model to produce a more efficient model for the task at hand. This means that the loss can have factors which do not directly come from the accuracy of the model. One example of such a factor would be weight decay - where the loss value grows if the total magnitude of the weights in the model is large. Weight decay is not related to the answers that the model produces and yet it can have a large influence on the model loss.

A problem may only have a small dataset available. When there is a small dataset it is not possible to train a model from scratch as it would be too specific to that dataset. So instead it would be better to train a base model on a similar task that has a larger dataset, and then refine the base model for the original task.

This is called transfer learning, and it works well. The model that is used as the base model does not have to be trained by the practitioners that are trying to solve the current problem. Instead the model can be trained and then provided for use by others. Such a model is called a pretrained model.

A model consists of many layers. The majority of layers are concerned with extracting features from the data. The final layers take those features and turn them into a result.

The layers that extract features are collectively called the body, and the final layers that produce the result are called the head.

The early layers of a CNN find things like colour gradients and lines. The subsequent layers combine these features repeatedly to detect far more complex things such as eyes or textures or writing.

If you can represent the data in a “photo like” way then a CNN can be useful for working with it. CNNs have been used to process sound - e.g. determining if a sound is a gunshot. They have also been used to track mouse movements to spot fraud.

The architecture of a model is the specific choice and arrangement of layers that make up the model.

Segmentation is the process of taking an image and determining which class each pixel belongs to. For example a street scene can be segmented into “sky”, “building”, “pedestrian”, “car”, “road”… etc.

Hyperparameters are settings that are used to alter the training of the model that are not the input to the model. For example the degree to which the weights are updated in response to a given loss value.

Know the difference between what you are measuring and what you actually want - in the predictive policing example the measurement was of arrests, but the desire was actually to measure crime.

Carefully roll out the use of any model. Have a time and location limited test with heavy human supervision, or just have the model running alongside a human who is doing the job. Monitor the model to ensure that it is performing the job well. Have a way to abort the rollout if the model is performing poorly.

A successful rollout is not the end. The model may perform well to start with and become worse over time. Make sure the performance is regularly validated.

Always have a way to involve a human to prevent run away automated decision making.

So I’ve watched all of the lesson and answered the questions as well as I can. There are just two that I don’t have answers to.

I think it would be helpful to actually run each of the training types that Jeremy mentions at the end of the lesson and then try to predict an outcome with the trained model.