
March 7, 2021

```python
from fastai.vision.all import *
```

Neural network models are difficult to understand. When a network makes a prediction, why did it choose that specific prediction? Is the network biased in some way? Is it ignoring relevant information?

The ability to interpret the behaviour of models is important. Approaches like Random Forests or Decision Trees allow interpretation by looking at the features that they use for classification. A neural network takes inputs and combines them in complex ways. Looking directly at the structure of the network does not show why it is making a decision.

A recent workshop demonstrated the LIME tool (Local Interpretable Model-Agnostic Explanations). LIME explores how a model classifies an input by perturbing that input and observing how the output changes. It then fits a simple, interpretable model to those observations, which lets it present the impact of the different features on the prediction.
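The core loop can be sketched in a few lines of NumPy for a single instance whose interpretable features (superpixels, for an image) are switched on or off. This is a minimal sketch, not the lime library's implementation: the name `lime_weights`, the distance (fraction of features switched off), the kernel width and the plain weighted least-squares fit are all my simplifying assumptions. The library differs in detail, for example it fits a ridge regression by default.

```python
import numpy as np

def lime_weights(predict_fn, n_features, num_samples=500, kernel_width=0.25, seed=0):
    """Hypothetical minimal LIME-style explanation of one instance.

    predict_fn maps a (num_samples, n_features) 0/1 mask array to a
    (num_samples,) array of scores for the class being explained.
    Returns (intercept, per-feature weights) of the local linear surrogate.
    """
    rng = np.random.default_rng(seed)
    # Each row switches interpretable features on (1) or off (0).
    masks = rng.integers(0, 2, size=(num_samples, n_features))
    masks[0, :] = 1  # the first sample is the unperturbed instance
    scores = predict_fn(masks)
    # Assumed distance: fraction of features switched off.
    distances = 1.0 - masks.mean(axis=1)
    # Exponential kernel: samples close to the original count more.
    weights = np.sqrt(np.exp(-(distances ** 2) / kernel_width ** 2))
    # Weighted least squares with an intercept column.
    design = np.hstack([np.ones((num_samples, 1)), masks])
    sqrt_w = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sqrt_w[:, None], scores * sqrt_w, rcond=None)
    return coef[0], coef[1:]
```

A positive weight means switching that feature on pushes the score for the class up; a negative weight means it detracts from the class.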

I’m interested in exploring how LIME works and what conclusions can be drawn from it. This post is an exploration of LIME.

The first thing to do is to get a dataset and a model and see if I can get LIME to explain the model's predictions. LIME can work with:

- text
- tabular data
- images

Since the workshop already covered tabular data, I'm inclined to use an image classifier. It's easy to get a pretrained ResNet model.

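```python
from pathlib import Path

import numpy as np
import torch
from PIL import Image
from torchvision import models

# Pretrained ImageNet ResNet-18, switched to inference mode.
model = models.resnet18(pretrained=True)
model.eval()

def open_image(path: Path, size: int = 224) -> Image.Image:
    # Load, force 3-channel RGB, and resize to the model's input size.
    image = Image.open(path)
    image = image.convert('RGB')
    image = image.resize((size, size))
    return image

def to_array(image: Image.Image) -> np.ndarray:
    # Scale pixels from [0, 255] to [-1, 1]. torchvision's pretrained models
    # were trained with per-channel ImageNet mean/std normalization, so this
    # is an approximation of the expected input.
    array = np.array(image, dtype="float")
    array = array / 255.0
    array = array - 0.5
    array = array * 2
    return array

def to_tensor(array: np.ndarray) -> torch.Tensor:
    # Add a batch dimension to a single image, then convert the
    # (N, H, W, C) layout to the (N, C, H, W) layout PyTorch expects.
    tensor = torch.Tensor(array)
    if len(array.shape) == 3:
        tensor = tensor[None, :]
    return tensor.permute(0, 3, 1, 2)
```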
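For comparison, torchvision documents per-channel ImageNet statistics (mean `[0.485, 0.456, 0.406]`, std `[0.229, 0.224, 0.225]`) as the normalization its pretrained models expect. The sketch below is a hypothetical drop-in alternative to `to_array` that uses them; the name `to_array_imagenet` is mine, and I have not checked how much it changes the predictions that follow.

```python
import numpy as np

# Per-channel ImageNet statistics documented for torchvision's pretrained models.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])

def to_array_imagenet(pixels) -> np.ndarray:
    """Hypothetical alternative to to_array using ImageNet normalization.

    pixels: anything np.asarray accepts with shape (H, W, 3) and values in
    0..255, such as the PIL image returned by open_image.
    """
    array = np.asarray(pixels, dtype=np.float64) / 255.0
    # Broadcasting applies each channel's mean and std along the last axis.
    return (array - IMAGENET_MEAN) / IMAGENET_STD
```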

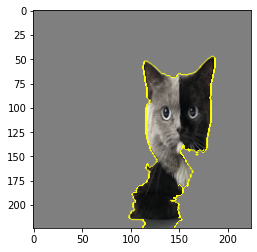

cat_image = open_image(Path("cat.jpg"))

cat_array = to_array(cat_image)

cat_image

Running the model on this image gives the indices of the five most likely classes, `array([285, 284, 281, 478, 457])`. I can decode these using the ImageNet labels (see a JSON file here).
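The decoding step can be sketched as a softmax over the raw outputs followed by a descending sort. This is a hedged sketch: `decode_top_k` is a hypothetical helper, and it assumes the label file has already been loaded into a list where `labels[i]` is the name of class `i`.

```python
import numpy as np

def decode_top_k(logits, labels, k=5):
    """Return (label, probability, index) for the k most likely classes.

    logits: 1-D array of raw model outputs for one image.
    labels: list where labels[i] names class i (assumed file layout).
    """
    logits = np.asarray(logits, dtype=np.float64)
    # Softmax, shifted by the max for numerical stability.
    exp = np.exp(logits - logits.max())
    probs = exp / exp.sum()
    # Indices sorted from most to least likely, truncated to k.
    top = np.argsort(probs)[::-1][:k]
    return [(labels[i], float(probs[i]), int(i)) for i in top]
```

Printing each result with `f"{name}: {p:.2%} ({i})"` produces lines in the same shape as the listings in this post.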

Egyptian Mau: 73.68%

Siamese cat: 6.75%

tabby cat: 6.02%

carton: 2.56%

bow tie: 0.99%

An Egyptian Mau is a specific breed of cat, so I'll take it. Let's try running LIME over this. I'm following the LIME basic image notebook.
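LIME's image explainer calls the classifier with a batch of perturbed images in height, width, channel layout and expects an array of class probabilities back, one row per image. A hedged NumPy sketch of that adapter follows; `make_classifier_fn` and `model_fn` are hypothetical names, with `model_fn` standing in for the ResNet forward pass (taking channels-first float arrays and returning logits). The returned function is the kind of thing passed as the `classifier_fn` argument of `LimeImageExplainer.explain_instance`.

```python
import numpy as np

def make_classifier_fn(model_fn):
    """Adapt a model to the batch-in, probabilities-out shape LIME expects.

    model_fn (hypothetical stand-in for the ResNet forward pass) takes a
    float array of shape (N, 3, H, W) and returns logits of shape (N, classes).
    """
    def classifier_fn(images):
        # LIME passes perturbed images as (N, H, W, 3); move channels first.
        batch = np.asarray(images, dtype=np.float64).transpose(0, 3, 1, 2)
        logits = model_fn(batch)
        # Row-wise softmax, shifted by the row max for numerical stability.
        shifted = logits - logits.max(axis=1, keepdims=True)
        exp = np.exp(shifted)
        return exp / exp.sum(axis=1, keepdims=True)
    return classifier_fn
```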

CPU times: user 1min 18s, sys: 3.17 s, total: 1min 21s

Wall time: 8.82 s

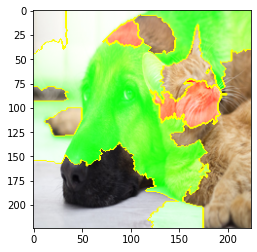
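Presumably most of the CPU time above goes into running the classifier over many perturbed copies of the image. For images, LIME splits the picture into superpixels and hides random subsets of them. A minimal sketch of building one such copy, assuming segments are numbered 0 to K-1 and hidden pixels are filled with a constant; `perturb` and `hide_color` here are my illustrative names, not the library's internals.

```python
import numpy as np

def perturb(image, segments, active, hide_color=0):
    """Build one perturbed image by hiding the inactive superpixels.

    image: (H, W, 3) array; segments: (H, W) int array of superpixel ids
    numbered 0..K-1; active: length-K 0/1 sequence saying which to keep.
    """
    perturbed = image.copy()
    off_ids = np.flatnonzero(np.asarray(active) == 0)
    # Boolean (H, W) mask of every pixel belonging to a hidden segment.
    hidden = np.isin(segments, off_ids)
    perturbed[hidden] = hide_color
    return perturbed
```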

We can also see what detracts from a specific label.
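Detracting regions are the superpixels with negative weights for the label. A hedged sketch of turning those weights into a pixel mask; `detractor_mask` is hypothetical, and I believe the library's `get_image_and_mask` picks negative regions in a broadly similar way, but this is not its code.

```python
import numpy as np

def detractor_mask(segments, weights, num_features=5):
    """Mark the segments whose LIME weight pushes against the label.

    segments: (H, W) int array of superpixel ids.
    weights: dict mapping segment id -> LIME weight for the label.
    Returns an (H, W) bool mask of the num_features most negative segments.
    """
    negative = [(seg, w) for seg, w in weights.items() if w < 0]
    negative.sort(key=lambda item: item[1])  # most negative first
    chosen = [seg for seg, _ in negative[:num_features]]
    return np.isin(segments, chosen)
```

If every weight is positive, the mask is empty, which is what "nothing detracts" looks like.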

Nothing detracts from the prediction here, because the image really is just a cat. Let's find a more ambiguous image.

German Shepherd Dog: 70.01% (235)

Malinois: 10.23% (225)

Bloodhound: 2.36% (163)

Chesapeake Bay Retriever: 1.97% (209)

Leonberger: 1.63% (255)

lion: 1.55% (291)

dingo: 1.19% (273)

Afghan Hound: 0.97% (160)

Australian Kelpie: 0.81% (227)

Great Dane: 0.61% (246)

cougar: 0.60% (286)

Irish Wolfhound: 0.46% (170)

tabby cat: 0.35% (281)

tiger cat: 0.34% (282)

Egyptian Mau: 0.33% (285)

Golden Retriever: 0.28% (207)

Norwegian Elkhound: 0.26% (174)

Basset Hound: 0.26% (161)

grey wolf: 0.24% (269)

Alaskan Malamute: 0.21% (249)

CPU times: user 1min 17s, sys: 4.33 s, total: 1min 21s

Wall time: 8.65 s

LIME mostly supports the dog prediction. The cat's muzzle is the main detractor, and I guess that is the most distinctively non-doggy feature in the image.

Overall I think this evaluation went well. It was quite tricky to format the data in a way that LIME accepts. I think the difficulty comes from LIME needing to understand the data well enough to manipulate it effectively.