March 8, 2021

I’m interested in managing a Kaggle competition submission from the CLI with git. I like Kaggle and want to make more submissions; I just don’t like editing files through the web interface. It would work much better for me if I could manage files in a project and then upload them to, or download them from, Kaggle via the CLI.

This post is an exploration of doing exactly that. I use the Kaggle CLI a lot, and it produces verbose text output. The text is poorly formatted in this post, sorry about that.

The Kaggle CLI is a Python command-line client for interacting with Kaggle. It is maintained by Kaggle and appears quite comprehensive, so I’m confident that what I want to do will be supported.

The first thing that I should do is explore the CLI a bit.

```text
usage: kaggle [-h] [-v] {competitions,c,datasets,d,kernels,k,config} ...
kaggle: error: the following arguments are required: command
```

```text
usage: kaggle [-h] [-v] {competitions,c,datasets,d,kernels,k,config} ...

optional arguments:
  -h, --help            show this help message and exit
  -v, --version         show program's version number and exit

commands:
  {competitions,c,datasets,d,kernels,k,config}
                        Use one of:
                        competitions {list, files, download, submit, submissions, leaderboard}
                        datasets {list, files, download, create, version, init, metadata, status}
                        config {view, set, unset}
    competitions (c)    Commands related to Kaggle competitions
    datasets (d)        Commands related to Kaggle datasets
    kernels (k)         Commands related to Kaggle kernels
    config              Configuration settings
```

This is encouraging, as it’s easy to explore the kaggle command in this notebook.
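Outside a notebook, the same commands can be run from Python with the standard library's subprocess module, which is handy for scripts. This is a minimal sketch: run_cli is a hypothetical helper name, and it assumes the kaggle executable is installed and on the PATH. It returns the captured standard output as a list of lines.

```python
from __future__ import annotations

import subprocess


def run_cli(*args: str, executable: str = "kaggle") -> list[str]:
    """Run a command-line program and return its standard output as a list of lines.

    Assumes `executable` is on the PATH (for the Kaggle CLI that is `kaggle`).
    Raises subprocess.CalledProcessError if the command exits with a non-zero status,
    which is what `kaggle` with no arguments does.
    """
    completed = subprocess.run(
        [executable, *args],
        capture_output=True,  # collect stdout/stderr instead of printing them
        text=True,            # decode the output to str
        check=True,           # raise on a non-zero exit status
    )
    return completed.stdout.splitlines()
```

For example, run_cli("kernels", "--help") should return the help text shown below, one line per list entry.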

I happen to know that the competition submission is managed through kernels, so let’s look at them a bit more.

usage: kaggle kernels [-h] {list,init,push,pull,output,status} ...

optional arguments:

-h, --help show this help message and exit

commands:

{list,init,push,pull,output,status}

list List available kernels. By default, shows 20 results sorted by hotness

init Initialize metadata file for a kernel

push Push new code to a kernel and run the kernel

pull Pull down code from a kernel

output Get data output from the latest kernel run

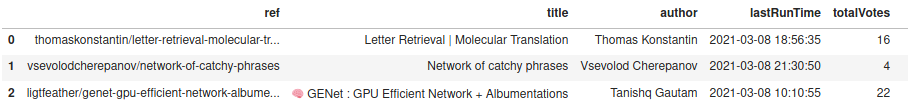

status Display the status of the latest kernel runref title author lastRunTime totalVotes

------------------------------------------------------- ------------------------------------------------ ------------------- ------------------- ----------

ligtfeather/genet-gpu-efficient-network-albumentations 🧠 GENet : GPU Efficient Network + Albumentations Tanishq Gautam 2021-03-08 10:10:55 20

ks2019/handy-rl-training-notebook Handy RL Training Notebook KS 2021-03-08 16:30:54 13

tunguz/tps-mar-2021-stacker TPS Mar 2021 Stacker Bojan Tunguz 2021-03-08 16:52:23 3

thomaskonstantin/letter-retrieval-molecular-translation Letter Retrieval | Molecular Translation Thomas Konstantin 2021-03-08 18:56:35 10

shikhar07/basic-eda-and-classification-using-knn Basic EDA and Classification using KNN Shikhar Sharma 2021-03-08 17:39:14 2

chandramoulinaidu/spam-classifier-multinomialnb Spam Classifier(MultinomialNB) Chandramouli Naidu 2021-03-08 17:39:48 2

kugane/classical-algolithm-approach-mtm-lsd-hough Classical algolithm approach (MTM/LSD/Hough) nibuiro 2021-03-08 17:06:58 2

sureshmecad/netflix-moviews-tv-shows Netflix moviews & TV Shows A SURESH 2021-03-08 16:56:49 21

khanrahim/fake-news-classification-easiest-99-accuracy Fake News Classification (Easiest 99% accuracy) Mohammad Rahim Khan 2021-03-08 07:03:46 15

fish0731/netflix-data-visualization-analysis Netflix Data Visualization & Analysis fish0731 2021-03-08 15:53:14 4

daidi06/titanic-ensemble-learning Titanic Ensemble Learning Didier ILBOUDO 2021-03-08 17:41:00 6

subinium/matplotlib-figure-for-lecture [Matplotlib] Figure for Lecture Subin An 2021-03-08 09:05:28 35

heyytanay/in-depth-eda-meta-features-testing In-depth EDA + Meta-Features + Testing! Tanay Mehta 2021-03-08 12:30:06 4

stutisehgal/data-science-diabetes-prediction Data Science-Diabetes Prediction stuti sehgal 2021-03-08 15:42:13 2

markusdegen/titanic-naive-bayes titanic_naive_bayes Markus Degen 2021-03-08 19:32:36 9

ruchi798/mmlm-2021-ncaaw-spread MMLM 2021 - NCAAW - Spread Ruchi Bhatia 2021-03-08 17:25:19 14

namanjeetsingh/customerchurn-using-neural-networks CustomerChurn Using Neural Networks Namanjeet Singh 2021-03-08 13:05:49 3

ivangavrilove88/acc-0-999-feature-engineering ACC = 0.999 (FEATURE ENGINEERING) Ivan Gavrilov 2021-03-08 18:09:07 1

dazzpool/tps-march-2021 TPS March 2021 ✨ Rohan kumawat 2021-03-08 17:57:31 1

jaysueno/titanic-comp titanic_comp Jay Sueno 2021-03-08 09:41:54 37 I wonder if I can turn this into a dataframe for easier viewing.
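One route to a dataframe is the -v/--csv flag of kaggle kernels list, which prints the listing as comma-separated values instead of a table. Below is a minimal sketch that parses that text with the standard csv module. It assumes the CSV header uses the same column names as the table above (ref, title, author, lastRunTime, totalVotes); parse_kernel_list is a hypothetical helper name.

```python
from __future__ import annotations

import csv
import io


def parse_kernel_list(csv_text: str) -> list[dict]:
    """Parse the output of `kaggle kernels list --csv` into one dict per kernel.

    Assumes the first line is a header row such as
    ref,title,author,lastRunTime,totalVotes.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = []
    for row in reader:
        # Votes arrive as text; convert them so rows can be sorted numerically.
        row["totalVotes"] = int(row["totalVotes"])
        rows.append(row)
    return rows
```

With pandas installed, pandas.DataFrame(parse_kernel_list(text)) gives the dataframe; pandas.read_csv(io.StringIO(text)) would also read the text directly.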

So this is nice enough, but what I need to work with are my own kernels.

```text
usage: kaggle kernels list [-h] [-m] [-p PAGE] [--page-size PAGE_SIZE]
                           [-s SEARCH] [-v] [--parent PARENT]
                           [--competition COMPETITION] [--dataset DATASET]
                           [--user USER] [--language LANGUAGE]
                           [--kernel-type KERNEL_TYPE]
                           [--output-type OUTPUT_TYPE] [--sort-by SORT_BY]

optional arguments:
  -h, --help            show this help message and exit
  -m, --mine            Display only my items
  -p PAGE, --page PAGE  Page number for results paging. Page size is 20 by default
  --page-size PAGE_SIZE
                        Number of items to show on a page. Default size is 20, max is 100
  -s SEARCH, --search SEARCH
                        Term(s) to search for
  -v, --csv             Print results in CSV format (if not set print in table format)
  --parent PARENT       Find children of the specified parent kernel
  --competition COMPETITION
                        Find kernels for a given competition slug
  --dataset DATASET     Find kernels for a given dataset slug. Format is {username/dataset-slug}
  --user USER           Find kernels created by a given username
  --language LANGUAGE   Specify the language the kernel is written in. Default is 'all'. Valid options are 'all', 'python', 'r', 'sqlite', and 'julia'
  --kernel-type KERNEL_TYPE
                        Specify the type of kernel. Default is 'all'. Valid options are 'all', 'script', and 'notebook'
  --output-type OUTPUT_TYPE
                        Search for specific kernel output types. Default is 'all'. Valid options are 'all', 'visualizations', and 'data'
  --sort-by SORT_BY     Sort list results. Default is 'hotness'. Valid options are 'hotness', 'commentCount', 'dateCreated', 'dateRun', 'relevance', 'scoreAscending', 'scoreDescending', 'viewCount', and 'voteCount'. 'relevance' is only applicable if a search term is specified.
```

| ref | title | author | lastRunTime | totalVotes |
|---|---|---|---|---|
| matthewfranglen/256x256x4-images | 256x256x4 images | Matthew Franglen | 2021-02-11 08:42:18 | 0 |
| matthewfranglen/hubmap-fast-ai-starter | HuBMAP fast.ai starter | Matthew Franglen | 2021-02-16 20:57:52 | 0 |

Both of these are copies of existing notebooks that community members have kindly provided. Let’s see if I can download the notebooks and upload a script associated with the HuBMAP competition.

```text
usage: kaggle kernels pull [-h] [-p PATH] [-w] [-m] [kernel]

optional arguments:
  -h, --help            show this help message and exit
  kernel                Kernel URL suffix in format <owner>/<kernel-name> (use "kaggle kernels list" to show options)
  -p PATH, --path PATH  Folder where file(s) will be downloaded, defaults to current working directory
  -w, --wp              Download files to current working path
  -m, --metadata        Generate metadata when pulling kernel
```

```text
Source code downloaded to /home/matthew/Programming/Blog/blog/notebooks/256x256x4-images.ipynb
```

So that worked, and there is now a notebook in this directory.

Pulling the kernel again with the -m (--metadata) flag should also download its metadata.

```text
Source code and metadata downloaded to /home/matthew/Programming/Blog/blog/notebooks
```

The metadata is:

```json
{
  "id": "matthewfranglen/256x256x4-images",
  "id_no": 14677191,
  "title": "256x256x4 images",
  "code_file": "256x256x4-images.ipynb",
  "language": "python",
  "kernel_type": "notebook",
  "is_private": true,
  "enable_gpu": false,
  "enable_internet": true,
  "keywords": [
    "art"
  ],
  "dataset_sources": [],
  "kernel_sources": [],
  "competition_sources": [
    "hubmap-kidney-segmentation"
  ]
}
```

So you can see that it is private, and that it is linked to the competition through competition_sources. This is a good example of the metadata needed to create a new kernel.
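Since the pulled metadata makes a good template, a new kernel's metadata could be derived from it instead of being written by hand. This is a minimal sketch that assumes the field names shown above; derive_metadata is a hypothetical helper, and it drops id_no on the assumption that the numeric id belongs to the existing kernel and should not be copied to a new one.

```python
import json
from pathlib import Path


def derive_metadata(template_path: Path, slug: str, title: str, code_file: str,
                    kernel_type: str = "script") -> dict:
    """Copy an existing kernel-metadata.json, replacing the fields that identify the kernel."""
    metadata = json.loads(template_path.read_text())
    owner = metadata["id"].split("/")[0]  # ids have the form <owner>/<kernel-slug>
    # id_no refers to the existing kernel, so it is not carried over (an assumption).
    metadata.pop("id_no", None)
    metadata.update({
        "id": f"{owner}/{slug}",
        "title": title,
        "code_file": code_file,
        "kernel_type": kernel_type,
    })
    # Everything else, including competition_sources, is kept from the template.
    return metadata
```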

The next thing to check out is creating a kernel associated with the competition and uploading a script.

```text
usage: kaggle kernels init [-h] [-p FOLDER]

optional arguments:
  -h, --help            show this help message and exit
  -p FOLDER, --path FOLDER
                        Folder for upload, containing data files and a special kernel-metadata.json file (https://github.com/Kaggle/kaggle-api/wiki/Kernel-Metadata). Defaults to current working directory
```

So now I need to come up with some metadata and a script. The script can be very simple, as I just want to establish that the kernel is created and run.

```python
import json
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as directory:
    metadata_file = Path(directory) / "kernel-metadata.json"
    metadata_file.write_text(json.dumps({
        "id": "matthewfranglen/test-kernel",
        "title": "test-kernel",
        "code_file": "test.py",
        "language": "python",
        "kernel_type": "script",
        "is_private": True,
        "enable_gpu": False,
        "enable_internet": False,
    }))

    # The kernel script just writes a file so I can check that it ran.
    script_file = Path(directory) / "test.py"
    script_file.write_text("""
from pathlib import Path
Path("/kaggle/working/output.txt").write_text("hello, world!")
""")

    # %sx is an IPython magic that runs a shell command and returns its output lines.
    response = %sx kaggle kernels init --path {directory}
    print(response)
    print(metadata_file.read_text())
```

```text
['Kernel metadata template written to: /tmp/tmpwnwsn320/kernel-metadata.json']
{
  "id": "matthewfranglen/INSERT_KERNEL_SLUG_HERE",
  "title": "INSERT_TITLE_HERE",
  "code_file": "INSERT_CODE_FILE_PATH_HERE",
  "language": "Pick one of: {python,r,rmarkdown}",
  "kernel_type": "Pick one of: {script,notebook}",
  "is_private": "true",
  "enable_gpu": "false",
  "enable_internet": "true",
  "dataset_sources": [],
  "competition_sources": [],
  "kernel_sources": []
}
```

This does not create the kernel. Instead, the metadata file I wrote has been replaced by a template.

It looks like the kaggle kernels init command just writes a sample metadata file. I also don’t see a way to associate the kernel with the competition, so I’m going to add the competition to competition_sources and push the kernel directly.
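Because kaggle kernels init only writes a template, it would be easy to push a metadata file that still contains placeholders. This is a minimal sketch of a check, assuming placeholders look like the ones in the template above (values containing INSERT_ or starting with "Pick one of"); find_placeholders and check_metadata_file are hypothetical helper names.

```python
from __future__ import annotations

import json
from pathlib import Path


def find_placeholders(metadata: dict) -> list[str]:
    """Return the keys whose values still look like kaggle kernels init placeholders."""
    unfilled = []
    for key, value in metadata.items():
        if isinstance(value, str) and ("INSERT_" in value or value.startswith("Pick one of")):
            unfilled.append(key)
    return unfilled


def check_metadata_file(path: Path) -> None:
    """Raise ValueError if the kernel-metadata.json at `path` has unfilled placeholders."""
    unfilled = find_placeholders(json.loads(path.read_text()))
    if unfilled:
        raise ValueError(f"unfilled metadata fields: {', '.join(unfilled)}")
```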

```python
with tempfile.TemporaryDirectory() as directory:
    metadata_file = Path(directory) / "kernel-metadata.json"
    metadata_file.write_text(json.dumps({
        "id": "matthewfranglen/test-kernel",
        "title": "test-kernel",
        "code_file": "test.py",
        "language": "python",
        "kernel_type": "script",
        "is_private": True,
        "enable_gpu": False,
        "enable_internet": False,
        # Attach the competition to the kernel.
        "competition_sources": ["hubmap-kidney-segmentation"],
    }))

    script_file = Path(directory) / "test.py"
    script_file.write_text("""
from pathlib import Path
Path("/kaggle/working/output.txt").write_text("hello, world!")
""")

    # push uploads the folder and runs the kernel.
    response = %sx kaggle kernels push --path {directory}
    print(response)
```

```text
['Kernel version 1 successfully pushed. Please check progress at https://www.kaggle.com/matthewfranglen/test-kernel']
```

This time it worked! The output file was created and contained the expected text.

Listing my kernels again shows the new test-kernel:

| ref | title | author | lastRunTime | totalVotes |
|---|---|---|---|---|
| matthewfranglen/256x256x4-images | 256x256x4 images | Matthew Franglen | 2021-03-09 02:02:06 | 0 |
| matthewfranglen/test-kernel | test-kernel | Matthew Franglen | 2021-03-09 02:11:25 | 0 |
| matthewfranglen/hubmap-fast-ai-starter | HuBMAP fast.ai starter | Matthew Franglen | 2021-02-16 20:57:52 | 0 |

The kernel has also been associated with the competition. That’s neat.

Let’s try changing it.

```python
with tempfile.TemporaryDirectory() as directory:
    metadata_file = Path(directory) / "kernel-metadata.json"
    metadata_file.write_text(json.dumps({
        "id": "matthewfranglen/test-kernel",
        "title": "test-kernel",
        "code_file": "test.py",
        "language": "python",
        "kernel_type": "script",
        "is_private": True,
        "enable_gpu": False,
        "enable_internet": False,
        "competition_sources": ["hubmap-kidney-segmentation"],
    }))

    # Same kernel id, different script content: this should create a new version.
    script_file = Path(directory) / "test.py"
    script_file.write_text("""
from pathlib import Path
Path("/kaggle/working/output.txt").write_text("goodbye cruel world!")
""")

    response = %sx kaggle kernels push --path {directory}
    print(response)
```

```text
['Kernel version 2 successfully pushed. Please check progress at https://www.kaggle.com/matthewfranglen/test-kernel']
```

Once again it has worked!

This actually seems quite straightforward.

As far as integrating this with git goes, I don’t think it’s possible. The Kaggle API cannot reasonably be added as a git remote because it behaves rather differently. While a push hook could be used to keep the Kaggle kernel up to date, I think I would rather use a make target to update it manually.
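A make target could call a small script that stages a tracked source file alongside its metadata and pushes it, which is the same thing the notebook cells above do by hand. This is a minimal sketch, assuming the kernel script and a kernel-metadata.json are kept in the git repository; stage_kernel and push_kernel are hypothetical names, and only the kaggle kernels push --path invocation comes from this post.

```python
import json
import shutil
import subprocess
import tempfile
from pathlib import Path


def stage_kernel(script: Path, metadata: dict, directory: Path) -> Path:
    """Copy `script` into `directory` next to a kernel-metadata.json that points at it."""
    directory.mkdir(parents=True, exist_ok=True)
    shutil.copyfile(script, directory / script.name)
    # Keep code_file in sync with the staged file name.
    staged = {**metadata, "code_file": script.name}
    (directory / "kernel-metadata.json").write_text(json.dumps(staged, indent=2))
    return directory


def push_kernel(script: Path, metadata_file: Path) -> None:
    """Stage the kernel in a temporary folder and push it with the Kaggle CLI."""
    metadata = json.loads(metadata_file.read_text())
    with tempfile.TemporaryDirectory() as tmp:
        stage_kernel(script, metadata, Path(tmp))
        # Assumes the kaggle executable is on the PATH and already authenticated.
        subprocess.run(["kaggle", "kernels", "push", "--path", tmp], check=True)
```

The make target would then only need to call push_kernel with the project's script and metadata paths, which keeps Kaggle out of the git workflow itself.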

If only there was a way to handle multiple files in a kernel, then working with Kaggle would integrate much more tightly with my existing workflow. Perhaps I can create an auto script smasher that puts all of the different files into one? Something for another day I think.
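One possible shape for such a script smasher, offered as a sketch rather than a tested tool: instead of concatenating files (which breaks the imports between them), embed each module's source in the generated script and register an import hook that serves those sources. bundle is a hypothetical name; the hook relies on the standard importlib.abc.MetaPathFinder and importlib.abc.Loader interfaces, and this sketch only handles flat module names, not packages.

```python
from __future__ import annotations

# Prepended to the generated script; {sources} is replaced with a dict literal.
_BOOTSTRAP = '''\
import importlib.abc
import importlib.util
import sys

_BUNDLED_SOURCES = {sources}


class _BundledImporter(importlib.abc.MetaPathFinder, importlib.abc.Loader):
    """Serve imports of bundled module names from the embedded source strings."""

    def find_spec(self, fullname, path, target=None):
        if fullname in _BUNDLED_SOURCES:
            return importlib.util.spec_from_loader(fullname, self)
        return None  # let the normal import machinery handle everything else

    def create_module(self, spec):
        return None  # use the default module object

    def exec_module(self, module):
        code = compile(_BUNDLED_SOURCES[module.__name__], module.__name__, "exec")
        exec(code, module.__dict__)


sys.meta_path.insert(0, _BundledImporter())
'''


def bundle(modules: dict[str, str], entry_source: str) -> str:
    """Build one script containing `modules` (name -> source) followed by `entry_source`."""
    return _BOOTSTRAP.format(sources=repr(modules)) + "\n" + entry_source
```

Because modules are only executed when first imported, the order of the entries in the dict does not matter, which is the main advantage over plain concatenation.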